At Superlinear, we see opportunities everywhere to empower people in their jobs. With AI, we can support employees with the more tedious parts of their day so they can focus on the things they love. The domain of Supply Chain or Operations Planning is no exception.

While gut feeling and experience are important in Supply Chain, we believe there are still many aspects where AI and data-driven methods can help guide and support that instinct.

“The future of Supply Chain is Intelligent” - Prof. Bram Desmet in Supply Chain Next

Here are just a couple of examples where AI could help:

Demand forecasting/demand sensing

Capacity planning

Facility location optimization

Inventory optimization

Route optimization

Sales & Operations planning

They all sound interesting, but you might still be unsure where to start or wonder if you are ready to integrate AI into your business. No problem. Let's take a look at how we handle such a project.

Many Supply Chain AI applications can be framed as optimization problems. In this blog post, I will guide you through how we handle optimization projects at Superlinear. I discuss some of the methods best suited to tackle an optimization project, their respective advantages and disadvantages, and their (data) requirements. By the end, you will have a better understanding of how to get started with AI in Supply Chain.

How do we handle a planning optimization project for Supply Chain in practice?

We see two main paradigms to tackle this kind of optimization project: creating a mathematical specification of the problem or harnessing the power of simulation. For the first, we will discuss an Operations Research approach. For the second, we look at using Evolutionary Algorithms and Reinforcement Learning.

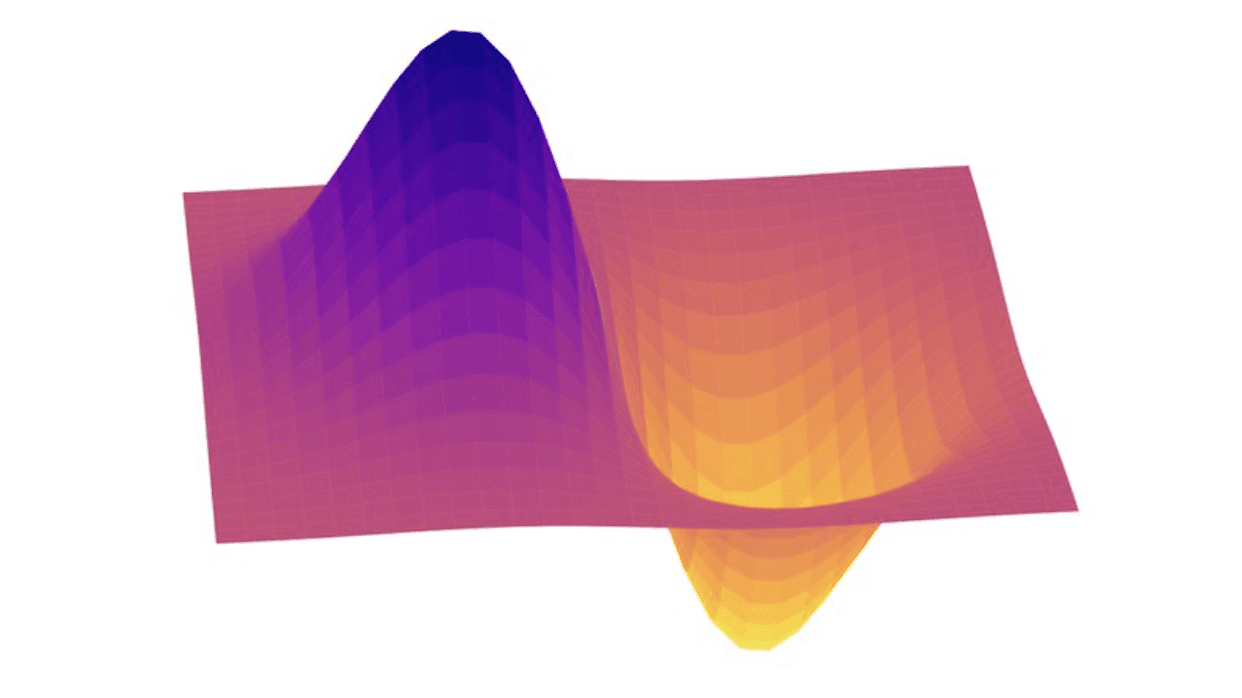

Figure 1: The illustrations in this blog post give some intuition behind the different optimization techniques. This is a 3D representation of the example problem space: the horizontal plane represents the parameter space, and the vertical axis represents the value we want to maximize.

Creating a mathematical model: Operations Research

Operations Research (OR) is a popular method for handling managerial and operational questions. In OR, we formalize a problem into an objective function and a set of constraints. The objective function specifies what we want to optimize, e.g. profit over a certain period or total travel time. The constraints are all the limiting factors that come into play, e.g. you can't sell more products than you have produced.
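To make this concrete, here is a minimal sketch of such a formulation for a hypothetical production plan with n products; the symbols are our own illustration, not taken from a real project. Here x_i is the number of units of product i produced, s_i the number sold, p_i its selling price, c_i its production cost per unit, d_i its expected demand, a_i the machine hours it needs per unit, and C the machine hours available.

```latex
\begin{aligned}
\max_{x,\,s} \quad & \sum_{i=1}^{n} \left( p_i s_i - c_i x_i \right) && \text{(profit)} \\
\text{s.t.} \quad & s_i \le x_i, \quad i = 1,\dots,n && \text{(cannot sell more than produced)} \\
& s_i \le d_i, \quad i = 1,\dots,n && \text{(cannot sell more than demanded)} \\
& \sum_{i=1}^{n} a_i x_i \le C && \text{(machine capacity)} \\
& x_i,\, s_i \ge 0, \quad i = 1,\dots,n
\end{aligned}
```

A solver then searches for the values of x_i and s_i that maximize the objective while satisfying every constraint.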

This way of working has a couple of advantages. Firstly, the mathematical specification of the problem can be built on the insights of company experts, so it doesn't require gathering large data sets upfront. Secondly, because the problem is mathematically formalized, we can guarantee that the solution found is optimal for the model as specified.

Specifying a problem mathematically also has its limitations. Firstly, as the number of variables and constraints grows, the problem becomes increasingly difficult to model and solve. Secondly, the field of OR predates the open-source wave, and much of the established software comes with heavy license fees. Luckily, we have the in-house knowledge to build solutions with the available open-source tools, without any license fees.

If you want to see an example of such a project in production, take a look at this case study we did about one of our projects.

Figure 2: This 2D version of the optimization problem introduced in Figure 1 shows the intuition behind operations research. OR starts by creating an approximate mathematical specification of the function we want to optimize, so we know the function value over the whole problem space. Adding constraints limits the parameter space. Combining both leads to the optimal feasible solution.
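The intuition in Figure 2 can be reproduced for a toy two-variable problem. The sketch below is a brute-force illustration, not how production solvers work: for a bounded, feasible linear program the optimum lies at a corner (vertex) of the feasible region, so it intersects every pair of constraint boundaries, keeps the feasible intersection points and returns the best one. The function name `solve_2d_lp` and the example numbers are hypothetical; real projects would use a dedicated LP/MIP solver.

```python
from itertools import combinations

# Each constraint a*x1 + b*x2 <= rhs is stored as (a, b, rhs).
Constraint = tuple[float, float, float]


def solve_2d_lp(objective: tuple[float, float], constraints: list[Constraint],
                tol: float = 1e-9):
    """Maximise objective[0]*x1 + objective[1]*x2 over a bounded 2D feasible region."""
    best_point, best_value = None, float("-inf")
    for (a1, b1, r1), (a2, b2, r2) in combinations(constraints, 2):
        det = a1 * b2 - a2 * b1
        if abs(det) < tol:  # parallel boundaries do not meet in a single vertex
            continue
        # Cramer's rule for a1*x1 + b1*x2 = r1 and a2*x1 + b2*x2 = r2.
        x1 = (r1 * b2 - r2 * b1) / det
        x2 = (a1 * r2 - a2 * r1) / det
        # Keep the vertex only if it satisfies every constraint (i.e. it is feasible).
        if all(a * x1 + b * x2 <= r + tol for a, b, r in constraints):
            value = objective[0] * x1 + objective[1] * x2
            if value > best_value:
                best_point, best_value = (x1, x2), value
    return best_point, best_value


# Hypothetical example: two products competing for machine and labour hours.
constraints = [
    (2, 1, 100),  # machine hours: 2 h per unit of product 1, 1 h per unit of product 2
    (1, 1, 80),   # labour hours
    (1, 0, 40),   # demand for product 1 is at most 40 units
    (-1, 0, 0),   # x1 >= 0
    (0, -1, 0),   # x2 >= 0
]
point, value = solve_2d_lp((30, 20), constraints)
print(point, value)  # (20.0, 60.0) 1800.0
```

The number of vertices explodes with more variables, which is one reason real solvers rely on smarter methods such as simplex or interior-point algorithms.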

The power of simulation: Evolutionary algorithms

When a problem becomes too large to solve efficiently with OR, it can be worth harnessing the power of simulation. While building a simulation model is also an investment, it can be easier than formalizing the problem. Moreover, once you have a simulation model, you can iterate much faster and try different parameter settings to see what works best.
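To make "simulation model" concrete, here is a minimal sketch of one, assuming a hypothetical single-item inventory controlled by a reorder point and an order quantity, with uniformly distributed daily demand, a fixed lead time and made-up costs. Evaluating a parameter setting is then just a function call, which is what makes fast iteration possible. The fixed random seed makes every policy face the same demand, so differences in cost come from the policy rather than from luck.

```python
import random


def simulate_inventory(reorder_point: int, order_qty: int, days: int = 365,
                       lead_time: int = 3, seed: int = 42) -> float:
    """Total cost of a (reorder point, order quantity) policy over `days` days.

    Hypothetical toy model: uniform daily demand, fixed lead time and
    made-up holding, stockout and ordering costs.
    """
    holding_cost, stockout_cost, ordering_cost = 1.0, 10.0, 50.0
    rng = random.Random(seed)  # same seed => every policy faces the same demand
    on_hand = order_qty
    pipeline: list[tuple[int, int]] = []  # outstanding orders as (arrival_day, qty)
    cost = 0.0
    for day in range(days):
        # Receive the orders that arrive today.
        on_hand += sum(q for d, q in pipeline if d == day)
        pipeline = [(d, q) for d, q in pipeline if d != day]
        # Serve today's demand; unmet demand is lost and penalised.
        demand = rng.randint(5, 15)
        sold = min(on_hand, demand)
        on_hand -= sold
        cost += stockout_cost * (demand - sold) + holding_cost * on_hand
        # Reorder when the inventory position (on hand + on order) hits the reorder point.
        if on_hand + sum(q for _, q in pipeline) <= reorder_point:
            pipeline.append((day + lead_time, order_qty))
            cost += ordering_cost
    return cost


# Trying a few parameter settings is just a handful of function calls.
for reorder_point, order_qty in [(20, 50), (40, 80), (60, 120)]:
    print(reorder_point, order_qty, simulate_inventory(reorder_point, order_qty))
```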

For real-world problems, trying all possible parameter settings would likely take far too long, even in a simulation model. That is why it is important to search the solution space intelligently. One way to do this is to borrow from nature. An evolutionary algorithm starts by trying a set of random parameter settings. After each iteration, the best-performing settings are selected, and the next iteration tries new combinations of them. This process continues until performance stops improving.
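The loop described above can be sketched as follows. This is a minimal illustration, not our production implementation: `toy_simulation` is a hypothetical stand-in for a real simulation run, and the parameter names (`pop_size`, `n_parents`, `patience`) are our own. We also add a small random mutation, which the paragraph does not mention but most evolutionary algorithms use so they can explore values not present in the initial population.

```python
import random
from typing import Callable

Params = list[float]


def evolve(fitness: Callable[[Params], float], bounds: list[tuple[float, float]],
           pop_size: int = 9, n_parents: int = 3, patience: int = 5,
           max_generations: int = 200, seed: int = 0) -> tuple[Params, float]:
    """Minimal evolutionary loop: random start, select the best, recombine, repeat."""
    rng = random.Random(seed)
    # Start from random parameter settings within the bounds.
    population = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    best, best_score, stale = population[0], float("-inf"), 0
    for _ in range(max_generations):
        # Evaluate every setting (one simulation run each) and rank them.
        ranked = sorted(((fitness(p), p) for p in population),
                        key=lambda sp: sp[0], reverse=True)
        if ranked[0][0] > best_score + 1e-9:
            best_score, best, stale = ranked[0][0], ranked[0][1], 0
        else:
            stale += 1
            if stale >= patience:  # stop once performance stops improving
                break
        parents = [p for _, p in ranked[:n_parents]]
        # Next generation: keep the parents and fill up with recombined children.
        population = list(parents)
        while len(population) < pop_size:
            a, b = rng.sample(parents, 2)
            child = [rng.choice(pair) for pair in zip(a, b)]  # uniform crossover
            # Small Gaussian mutation, clipped to the bounds, to keep exploring.
            child = [min(hi, max(lo, g + rng.gauss(0, 0.05 * (hi - lo))))
                     for g, (lo, hi) in zip(child, bounds)]
            population.append(child)
    return best, best_score


def toy_simulation(params: Params) -> float:
    """Hypothetical stand-in for a simulation run; performance peaks at (3, -1)."""
    x, y = params
    return -((x - 3) ** 2 + (y + 1) ** 2)


best, score = evolve(toy_simulation, bounds=[(-10.0, 10.0), (-10.0, 10.0)])
print(best, score)
```

With `pop_size=9`, each generation runs the simulation 9 times before the parameter settings are revised, mirroring the illustration in Figure 3. Keeping the parents in the next generation ("elitism") ensures the best score found never gets worse.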

Choosing the right way to combine, or 'cross over', parameter settings for your problem is crucial to arriving at a good solution. It influences both how the parameter space is 'explored' and how we close in on a good solution with every iteration. Choosing good initial parameter settings can also help a lot. For example, rather than starting from a completely random initialization, we can solve a simplified version of the problem with OR to limit the search space.
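Two common crossover operators and a seeded initialization could look as follows. This is a minimal sketch with hypothetical function names; the seed solution, which the paragraph suggests could come from a simplified OR model, is simply hard-coded here.

```python
import random

Params = list[float]


def uniform_crossover(a: Params, b: Params, rng: random.Random) -> Params:
    """Copy each parameter from one of the two parents at random.

    Children recombine existing values, which tends to favour exploration."""
    return [rng.choice(pair) for pair in zip(a, b)]


def arithmetic_crossover(a: Params, b: Params, rng: random.Random) -> Params:
    """Take a random weighted average of the parents, parameter by parameter.

    Children lie between their parents, so the population tends to
    contract around good regions (closing in on a solution)."""
    return [x + rng.random() * (y - x) for x, y in zip(a, b)]


def seeded_population(seed_solution: Params, bounds: list[tuple[float, float]],
                      size: int, spread: float, rng: random.Random) -> list[Params]:
    """Scatter the initial population around a known-good starting point
    (e.g. the solution of a simplified OR model) instead of fully at random."""
    population = [list(seed_solution)]  # keep the seed itself
    while len(population) < size:
        population.append([min(hi, max(lo, s + rng.gauss(0, spread * (hi - lo))))
                           for s, (lo, hi) in zip(seed_solution, bounds)])
    return population


rng = random.Random(1)
print(uniform_crossover([20.0, 50.0], [40.0, 80.0], rng))
print(arithmetic_crossover([20.0, 50.0], [40.0, 80.0], rng))
print(seeded_population([40.0, 80.0], [(0.0, 100.0), (1.0, 200.0)], size=9, spread=0.05, rng=rng)[:3])
```

A common design choice is to favour exploratory operators early on and contracting ones later, but the right mix depends on the problem.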

The main advantage of evolutionary algorithms, and of simulation in general, is that they allow us to take on larger and more complex problems (sometimes in combination with OR). On the other hand, because the problem is no longer formalized, we can no longer guarantee that the solution found is the best possible one.

If you want to know more about how we used evolutionary algorithms for inventory optimization, feel free to reach out for an introduction.

Figure 3: Instead of mathematically specifying the objective function, Evolutionary Algorithms use simulation to sample the objective function at points spread over the solution space. By combining the parameter settings of the best simulation runs, they converge towards the optimum. Note that the simulation must be run multiple times (here 9 times) before the parameter settings are revised.

The power of simulation: Reinforcement learning

Compared to Evolutionary Algorithms, you could say that Reinforcement Learning (RL) works one level of abstraction lower. Rather than borrowing from evolution and survival of the fittest, it tries to imitate how a person learns something. Instead of trying a batch of parameter settings and fast-forwarding through the simulation to see the result, we let an agent interact with an environment and, over time, learn which actions have the best effect in a given situation. Thanks to simulation, this does not have to take a lifetime.
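The agent-environment loop can be illustrated with tabular Q-learning, one of the simplest RL algorithms. This is a minimal sketch under loudly hypothetical assumptions: a single-product inventory where each day the agent picks an order quantity, orders arrive immediately, demand is random, and all costs are made up. The agent updates its value estimates after every simulated day, within the run.

```python
import random

MAX_STOCK, MAX_ORDER = 20, 10                    # hypothetical state and action limits
HOLDING, STOCKOUT, ORDER_FIXED = 1.0, 10.0, 5.0  # made-up costs


def step(stock: int, order: int, rng: random.Random) -> tuple[int, float]:
    """One day in a toy inventory environment; returns (next_stock, reward).

    The order arrives immediately (no lead time), then random demand is served."""
    stock = min(MAX_STOCK, stock + order)
    demand = rng.randint(0, 8)
    sold = min(stock, demand)
    next_stock = stock - sold
    cost = HOLDING * next_stock + STOCKOUT * (demand - sold) + (ORDER_FIXED if order > 0 else 0.0)
    return next_stock, -cost


def greedy(q: list[list[float]], stock: int) -> int:
    """Action with the highest estimated value in this state."""
    return max(range(MAX_ORDER + 1), key=lambda a: q[stock][a])


def q_learning(episodes: int = 2000, days: int = 30, alpha: float = 0.1,
               gamma: float = 0.95, epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: the agent learns from every single step it takes."""
    rng = random.Random(seed)
    q = [[0.0] * (MAX_ORDER + 1) for _ in range(MAX_STOCK + 1)]
    for _ in range(episodes):
        stock = rng.randint(0, MAX_STOCK)
        for _ in range(days):
            # Epsilon-greedy: mostly exploit what was learned, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randint(0, MAX_ORDER)
            else:
                action = greedy(q, stock)
            next_stock, reward = step(stock, action, rng)
            # Move Q(s, a) towards the reward plus the discounted best next value.
            target = reward + gamma * max(q[next_stock])
            q[stock][action] += alpha * (target - q[stock][action])
            stock = next_stock
    return q


q = q_learning()
print([greedy(q, s) for s in range(MAX_STOCK + 1)])  # learned order quantity per stock level
```

For realistic problems with many products and locations, such a table quickly becomes far too large; deep RL methods replace it with a neural network that generalizes across states.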

Current RL methods are very powerful. For example, RL agents have surpassed the world's best human players in strategic board games like chess and Go (a board game popular mainly in East Asia, and far more complex than chess). In strategy video games like Dota 2 and StarCraft II, RL agents have also beaten top professional players. This shows RL's ability to learn intricate behavior in situations where many different actions must be taken over a long period of time to achieve a long-term goal in diverse environments.

An RL algorithm can be trained on a digital twin, or simulation model, of your real-world system. Strictly speaking, no real data is needed if the simulation model is of very high quality, but I would recommend having at least some real data to fine-tune the model.

Even though a lot of progress has been made in RL in recent years, it still has a reputation for being rather difficult to use in practice. Being both the most powerful and the most difficult of the three methods, RL becomes more attractive the harder the problem gets.

I researched the use of RL for improving inventory management in supply chains in my master's dissertation at Ghent University. Feel free to reach out if you have more questions!

Figure 4: Like Evolutionary Algorithms, Reinforcement Learning samples the solution space. The difference is that RL makes its changes within a simulation run, instead of between simulation runs. RL keeps improving the same agent, rather than combining the experience of different agents.

Conclusion

Supply Chain and Operations Management is a field where AI can greatly empower employees. For each of your challenges, there is a tailored solution to take it on.

If you are not sure where to start exactly, reach out to us for a Fast Discovery. We will help you get a clearer vision of the opportunities of AI for your business.