
User experience design is a big part of the solution

At Superlinear, we aim to superpower people with our AI solutions. They are designed to serve the user and augment their abilities. The nature of artificial intelligence also means that we need to find new ways of designing these products and services to create a collaboration between people and AI and prevent mistakes and accidents from happening.

Three complexities of AI systems

The first complexity is the learning nature of AI. Artificial intelligence is a technology that tries to predict outcomes by learning from data. This learning dynamic is designed to mimic human cognitive processes, which means that as designers, our task is to shape how AI understands the world, much as we do with children. Since AI learns like a child, we need to build it in a way that allows it to learn from its mistakes.

Therefore, the interactions between humans and AI need to be very purposeful in both directions. Humans need to teach the AI how to do better next time, while at the same time learning to trust the AI’s decisions. All this is very similar to a toddler drawing on the walls. You know they are probably just doing this because it’s possible. So we try to give them a purpose by explaining that it will be a lot of work to get it cleaned up. In the case of the GPS system in the picture below, it could be very helpful to ask why users stop following the GPS and consider this for its next task.

A second complexity is that people, in general, are not very good at understanding statistics and predictions, and certainly not in combination with complex technological systems. One consequence is known as ‘automation bias’ (1): an excessive amount of trust in black box systems, just because they are framed as smart and intelligent. This phenomenon is often seen when people use a GPS to navigate. The recommended routes are seldom questioned, which can create problematic situations when the predictions or recommendations are inaccurate.

Image source: https://www.cnet.com/news/man-followed-gps-drove-off-disused-bridge-ramp-wife

These inaccurate predictions create the third complexity of designing for AI. Today it is relatively easy to build an AI model with an accuracy of 80 to 90%; gaining the last few percentage points, however, is a different story that requires a lot of money, data, time, and effort. This also means that there’s always a chance that a prediction is wrong, and thus that it is very important to calibrate the user’s trust in the system. For example, a GPS navigation system could indicate when it is unsure about a route, to warn the user that they should be attentive and use their own judgment if needed.
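As a minimal sketch of that GPS example, the system could attach a warning whenever its confidence drops below a threshold. The `RoutePrediction` type, the `present_route` function, and the 0.8 threshold are hypothetical names and values chosen for illustration, not part of any real navigation API.

```python
from dataclasses import dataclass


@dataclass
class RoutePrediction:
    route: str
    confidence: float  # assumed: the model's own estimate, between 0 and 1


def present_route(prediction: RoutePrediction, warn_below: float = 0.8) -> str:
    """Return the message shown to the driver for a predicted route."""
    if prediction.confidence < warn_below:
        # Show the seam: tell the user the system is unsure.
        return (
            f"Suggested route: {prediction.route}. "
            "I am not sure about this one, so please stay attentive."
        )
    return f"Suggested route: {prediction.route}."


print(present_route(RoutePrediction("via the old bridge", 0.55)))
print(present_route(RoutePrediction("via the highway", 0.95)))
```

The right threshold depends on the use case and on how well the model's confidence matches its real accuracy, so it would need to be tuned rather than copied.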

A fault-tolerant user experience

These three complications (a dynamic of learning, an excessive amount of trust, and statistical inaccuracy) can be addressed by changing how we design a user experience. Traditionally, a user experience would be designed to be as smooth and satisfying as possible to reduce friction and bounce rates. But in the case of AI products and services, it is important to create room for mistakes and feedback. That way, both parties have the opportunity to learn how to work together and collaborate to augment the user’s abilities.

Collaboration means that there’s mutual trust between humans and AI systems. That is why we need to embrace the occasional mistakes of AI and show the system's seams. After all, we know both the strengths and the weaknesses of the people we trust the most. The same should be possible for a relationship between humans and AI systems. In short, a fault-tolerant UX makes it possible to calibrate the user’s trust in the system, learn collaboratively, and compensate for the occasional overconfident prediction.

Depending on the use case, there are different methods of calibrating the user’s trust in the AI system. However, they are all based on the idea discussed above. They show the seams in the system and allow or even ask for human judgment to avoid the dreaded automation bias. We’ll introduce five of these methods that we have used in the past while developing AI projects at Superlinear.

Reference points

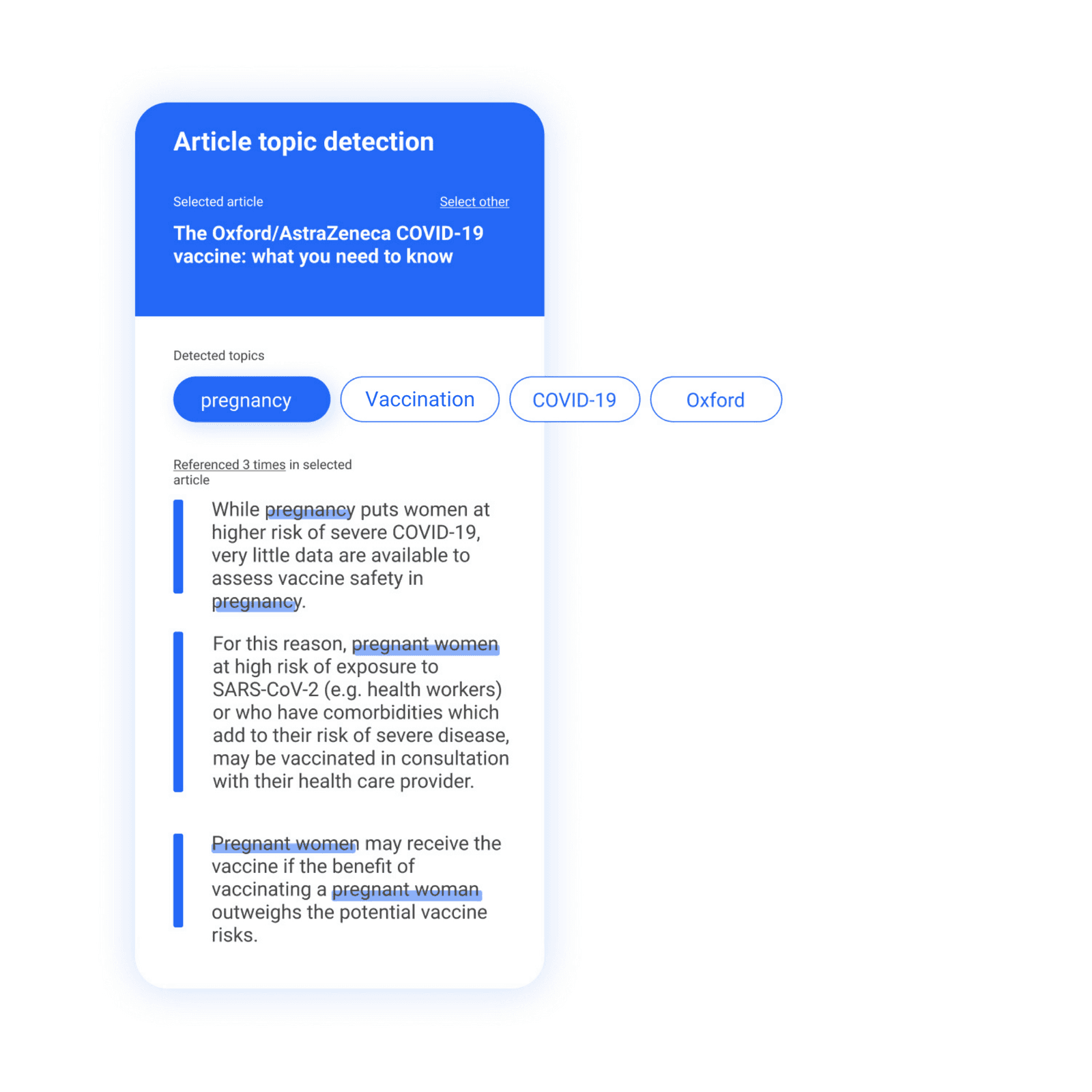

A theoretical topic detection app shows the references in the text for every topic detected.

The method of reference points consists of visually highlighting or showing the user what the AI was ‘looking at’ when it made a prediction or recommendation. This could be a highlighted piece of text or some other visual that gives the user context about how and why a prediction was made and allows them to use their own judgment if needed. Reference points are especially useful if the system presents conclusions to the user; they allow for a more collaborative relationship where the system says, ‘This reminds me of what I’ve learned at a certain moment in the past’.
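For text, reference points can be sketched as marking the character spans that a model reports as evidence. The `highlight` function and the `[[ ]]` markers below are hypothetical; how the spans are obtained (for example from an attribution method) is left out and depends on the model.

```python
def highlight(text: str, spans: list[tuple[int, int]]) -> str:
    """Wrap each (start, end) character span in [[ ]] markers.

    Assumes the spans do not overlap.
    """
    parts = []
    cursor = 0
    for start, end in sorted(spans):
        parts.append(text[cursor:start])             # untouched text before the span
        parts.append("[[" + text[start:end] + "]]")  # the evidence itself
        cursor = end
    parts.append(text[cursor:])                      # remainder after the last span
    return "".join(parts)


text = "The team won the cup final."
team = text.index("team")
final = text.index("cup final")
print(highlight(text, [(final, final + len("cup final")), (team, team + len("team"))]))
```

In a real interface the markers would be replaced by a visual highlight, but the principle is the same: the prediction is shown together with what it was based on.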

Simulations

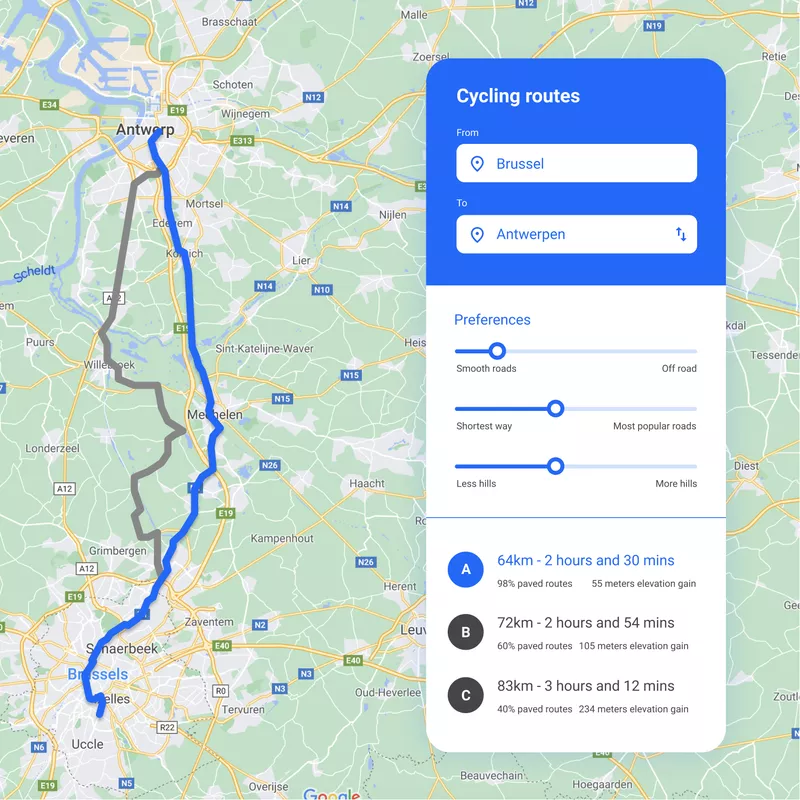

A theoretical cycling route finder lets the user simulate the possible routes by changing different parameters.

Simulations are commonly used in, for example, Google Maps. They give the user the possibility to play around with the predictions of an AI. By adjusting specific parameters, the user learns how the AI and its predictions behave and react under different circumstances. This is useful when a user wants to weigh the pros and cons of different outcomes before making a decision.
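A simulation can be sketched as re-running the same prediction while one parameter varies and the others stay fixed. In the sketch below, `toy_ride_time` is a made-up stand-in for a real model (including its assumed penalty of 6 minutes per 100 m climbed), and `simulate` is a hypothetical helper.

```python
from typing import Callable


def toy_ride_time(distance_km: float, climb_m: float, speed_kmh: float) -> float:
    """Stand-in for a real model: flat riding time plus a penalty for climbing."""
    flat_minutes = distance_km / speed_kmh * 60
    climb_minutes = climb_m / 100 * 6  # assumed penalty, for illustration only
    return flat_minutes + climb_minutes


def simulate(predict: Callable[..., float], base: dict, name: str, values: list) -> dict:
    """Re-run the prediction, varying one parameter and keeping the rest fixed."""
    return {value: predict(**{**base, name: value}) for value in values}


base = {"distance_km": 30, "climb_m": 200, "speed_kmh": 20}
for speed, minutes in simulate(toy_ride_time, base, "speed_kmh", [15, 20, 30]).items():
    print(f"{speed} km/h: about {minutes:.0f} minutes")
```

Exposing a sweep like this through sliders or toggles lets the user see how sensitive a prediction is before committing to it.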

Two minds

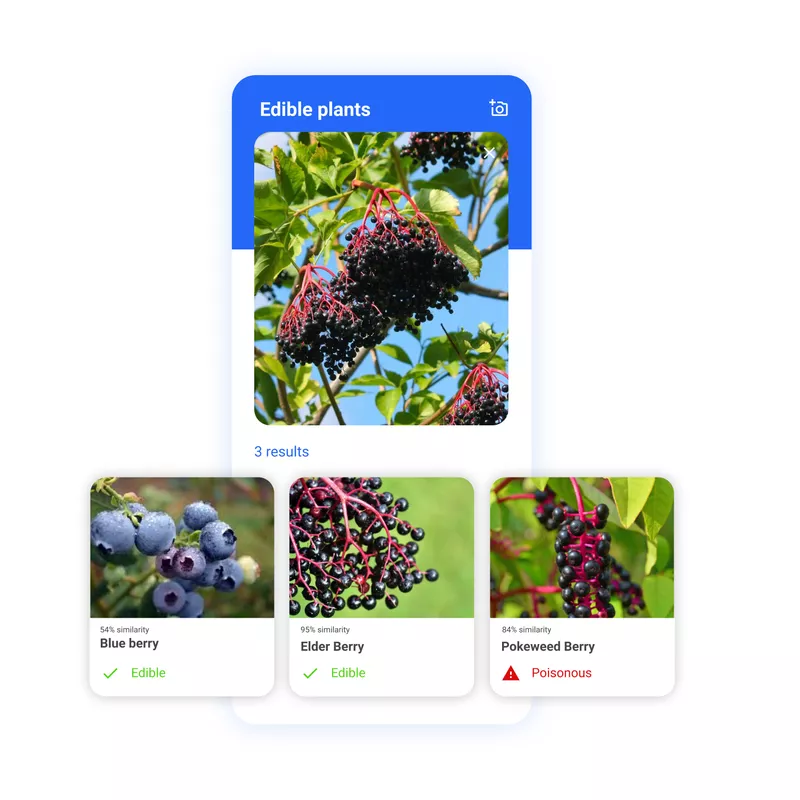

A theoretical edible plant scanner application warns the users of both sides of a prediction.

The two minds method means that both sides of a prediction are communicated. There’s a certain confidence that a prediction is right, but there’s also a certain confidence that it is wrong. This is useful when the result of a (wrong) prediction can have a significant impact or create safety issues. It is as if the AI is saying, ‘It might be this, but it might also be something else that we’re actively trying to avoid, so I would like your judgment on this question as well’.
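For a binary prediction, the two minds method can be sketched as phrasing the result so that both outcomes are visible. The `two_minds` function and its wording are hypothetical, and the sketch assumes the model's confidence is a reasonably calibrated probability.

```python
def two_minds(label: str, confidence: float, alternative: str) -> str:
    """Phrase a prediction so that both outcomes are visible to the user."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    likely = round(confidence * 100)
    unlikely = 100 - likely  # the side that is usually hidden from the user
    return (
        f"{likely}% chance this is {label}, "
        f"but {unlikely}% chance it is {alternative}. What do you think?"
    )


print(two_minds("edible", 0.8, "poisonous"))
```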

Options

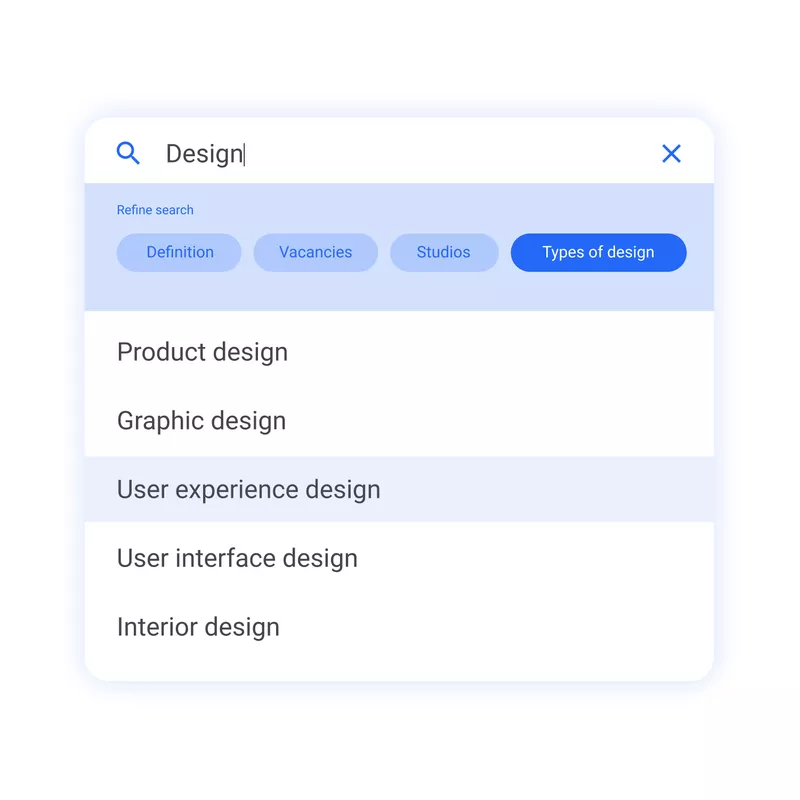

A theoretical search application indicates different contexts for a search term, giving the user options to refine their search.

Even when the AI is more confident about one result, it is good to offer multiple predictions to the user. It allows them to ponder a handful of options and use their judgment before taking action. This helps build confidence and feels like the AI is saying, ‘Look, I’ve considered multiple options, and I think this is the best path’.
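Offering options can be as simple as returning the top few candidates instead of only the best one. The sketch below assumes the model exposes a score per candidate; the `top_options` function and the example scores are made up for illustration.

```python
def top_options(scores: dict[str, float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k highest-scoring candidates, best first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Hypothetical scores for the search term "python".
scores = {
    "Python (programming language)": 0.62,
    "python (snake)": 0.25,
    "Monty Python (comedy group)": 0.10,
    "other": 0.03,
}
for name, score in top_options(scores):
    print(f"{name}: {score:.0%}")
```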

Nearest neighbors

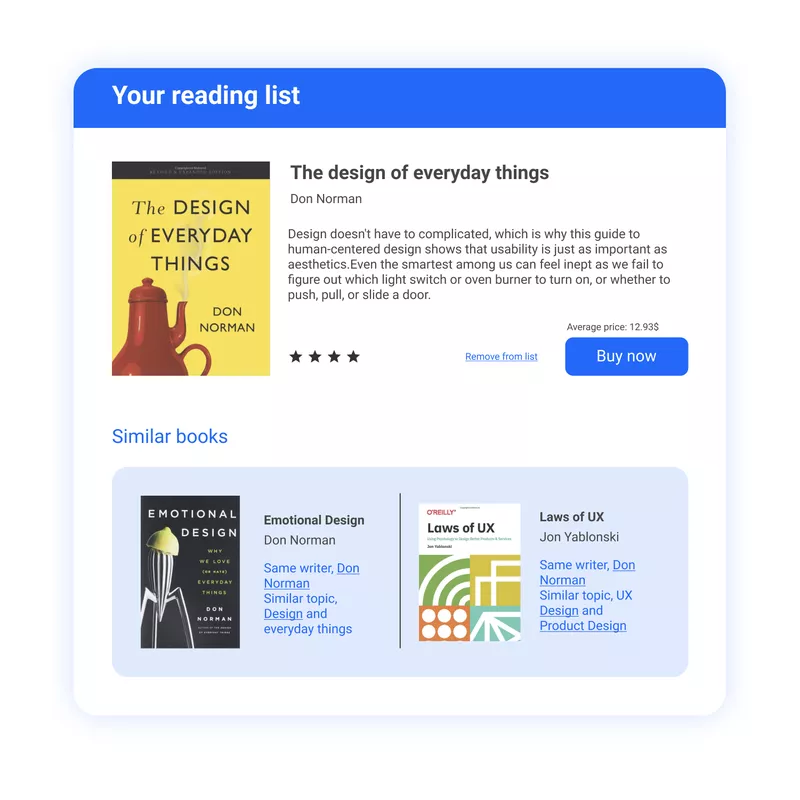

A theoretical online book shop shows books related to the topic of a recently viewed book.

When the AI presents its predictions or recommendations in a certain order, it is interesting to show the close-but-not-quite results. This indicates what the AI was looking at and feels like the AI says, ‘This is definitely the most similar recommendation, but it also reminds me of other things I’ve learned before’.
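One common way to find such close-but-not-quite results is to compare items by the similarity of their feature vectors. The sketch below uses cosine similarity in plain Python; the `nearest` function, the book titles, and the two-dimensional vectors are hypothetical, and the sketch assumes no vector is all zeros.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def nearest(query: str, vectors: dict[str, list[float]], k: int = 3) -> list[str]:
    """Return the k items most similar to the query item, most similar first."""
    others = [name for name in vectors if name != query]
    others.sort(key=lambda name: cosine(vectors[query], vectors[name]), reverse=True)
    return others[:k]


# Made-up vectors; in practice these would come from a trained model.
books = {
    "Designing for AI": [1.0, 0.0],
    "UX Fundamentals": [0.9, 0.1],
    "Statistics for Everyone": [0.5, 0.5],
    "Bread Baking Basics": [0.0, 1.0],
}
print(nearest("Designing for AI", books, k=2))
```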

Conclusion

We’ve noticed from first-hand experience that developing AI systems that collaborate with their users leads to a better user experience. By showing the boundaries of AI, asking for feedback or confirmation on certain predictions, adding context to others, or even giving the user the ability to play around and simulate different scenarios, our users become more involved with the AI system. They are excited to try out and explore future possibilities. So by designing a fault-tolerant user experience, you will not only prevent accidents and dangerous situations; your product or service will also provide more value to its users by effectively and collaboratively augmenting their capabilities.

This shows us that by intentionally designing more collaborative AI systems instead of making them as easy-to-use or as smooth as possible, we can superpower people’s abilities and address real human needs. The possibilities to design with purpose and address these needs are immense when augmenting people’s capabilities, making for a really exciting future.

Designing a fault-tolerant user experience that allows users and AI systems to learn collaboratively is one thing, but there are other discussions to be had. A very interesting blog post on designing AI with purpose, written by Josh Lovejoy, mentions that besides learnability one also has to discuss the capacity and the accuracy of AI. This means that it is vital to know which tasks can be automated and will actually help the user, and to make sure you and your team know how to measure both accuracy and inaccuracy. Food for thought and future blog posts!

Sources

1) Arxiv

author(s)

Jef Willemyns