
In short: No! With so many unknowns, fear of AI technology is only natural. Creations are shared without any mention of their limitations. This leads to uncertainty about how this new technology will fit into the current industry, and what it means for your job as a creator, designer, or art director.

This is exactly what we’ll be talking about in this post. Instead of an uninformed black-and-white view, what about a knowledgeable shade of gray with AI as your superpower?

I’ll be sharing our hands-on learnings at Superlinear, as we’ve been guiding companies in their Generative AI journey. Now let’s discover where AI could fit in your workflow. And I assure you… we’ll cover the full picture!

The cycle of wins

Creators and their clients often have multiple iterations of exchanging feedback and ideas. The client wants the result to align with its values and brand, and might already have a specific vision — “my product should look professional and sexy”. To this end, the creator provides a few alternatives the client can choose from, and uses the feedback for further development. It is in this ideation phase that AI can greatly accelerate the iteration cycle between creators and their clients. It’s a win-win really…

As a creator, you need many time-consuming alternatives during ideation, in the form of mood boards and campaign visuals, to propose to a client. With generative AI, such alternatives can be generated in no time. Additionally, you can see it as your personalized source of inspiration, while others keep fishing in the same pond of Google and Dribbble. Coca-Cola’s recent AI-inspired marketing campaign is a stunning example showing how AI can superpower your inspiration!

From the client’s perspective, it’s hard to communicate visual ideas explicitly. Generated images can give the creator a good reference for style and composition, vastly accelerating early client briefings. As an example, the Superlinear marketing department uses generative AI to quickly generate concepts that give their design agency a head start. The agency’s art director can now align the workflow with the client’s expectations from the start.

Generative AI models: Buy or build?

Wading through the sea of generative AI models can be overwhelming. The choice of model often comes down to your specific use case and how much you’re in need of a customizable solution. In short, it comes down to the question: Should you buy or build?

Let me break it down for you.

AI as a service (DALL-E2 and Midjourney) compared to your own AI (Stable Diffusion XL).

Buy: Generative AI as a service

First up, we have proprietary solutions in the form of AI-as-a-service, such as DALL-E2 from OpenAI, and Midjourney with a more ethereal image style — both are compared in the two leftmost figures above. As the AI-based tool is provided as a service, it typically comes with subscription fees or a cost per generated image.

These models shine in their usability by generating impressive pictures out-of-the-box with just the click of a button. AI as a service is easy to use directly in your browser, or might already be integrated into your workflow, for example in Photoshop’s generative fill.

Often some cool AI-automated editing features are included that let you change parts of the image, or even add new parts outside of the image borders. Nonetheless, they remain limited to the preset functionalities. Adding your own products or styles is not possible and you only have limited control over the generated content.

Another major concern we frequently encounter at Superlinear when road-mapping a client’s AI journey is the dependency on a third party. You see, when they’re down, you’re down. And if they make (unannounced) changes to their AI service, there’s not much you can do.

Build: Customizable open models

In stark contrast to these proprietary models stand open-source models that are free to use! The main example is Stable Diffusion, released by Stability AI with the philosophy of putting AI in the hands of the people to accelerate progress in the field.

With this free access, you even have the flexibility to run Stable Diffusion on your own laptop. User interfaces such as EasyDiffusion or Automatic1111 let you easily generate images without touching a line of code.

The major advantage of these open models is the opportunity to build your own unique generative AI, without being dependent on a third party. You can tailor your model to your own styles and products, or can even have multiple models for different use cases. If you read on, I’ll show you some neat examples!

Note that when building AI, the nature of your use case might also need you to consider the ‘fairness’ of your system and its potential biases. While the legal component is not the focus today, you can find a great overview in our article: Can I use AI image generators for commercial purposes.

Using General Generative AI

Now that you have a better idea of when to buy or build generative AI, let’s see how to actually use it!

I’ll discuss two options that are available in basically all ‘buy’ or ‘build’ solutions: you can control it by giving it text (a prompt), or a reference image.

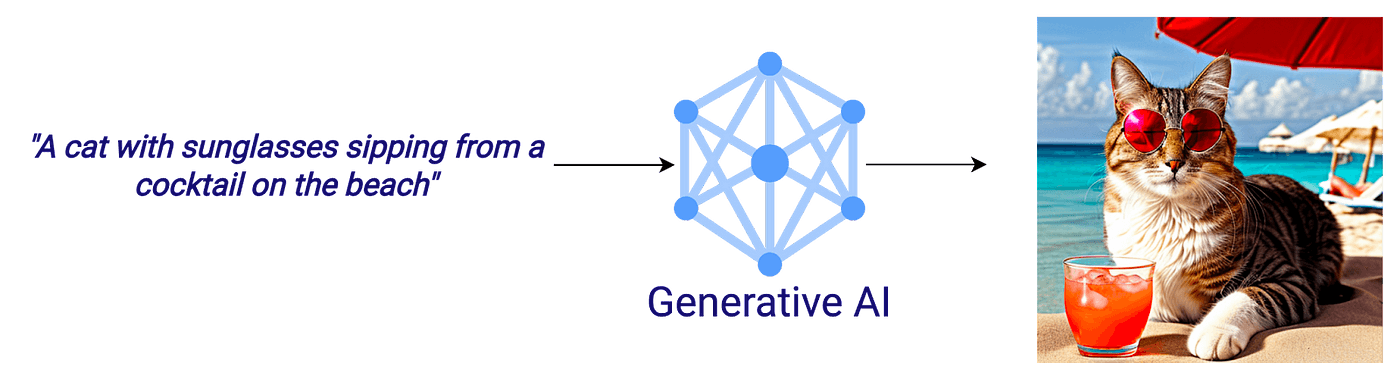

1. Controlling with text

This is the most renowned and commonly used functionality of Generative AI. It’s as straightforward as it seems — write down your idea, hit ‘enter’, and voila! The AI translates your textual idea into an image, visualizing the essence of your thoughts.

Text-to-image lets you ‘talk’ to the AI: your text prompt results in corresponding images!

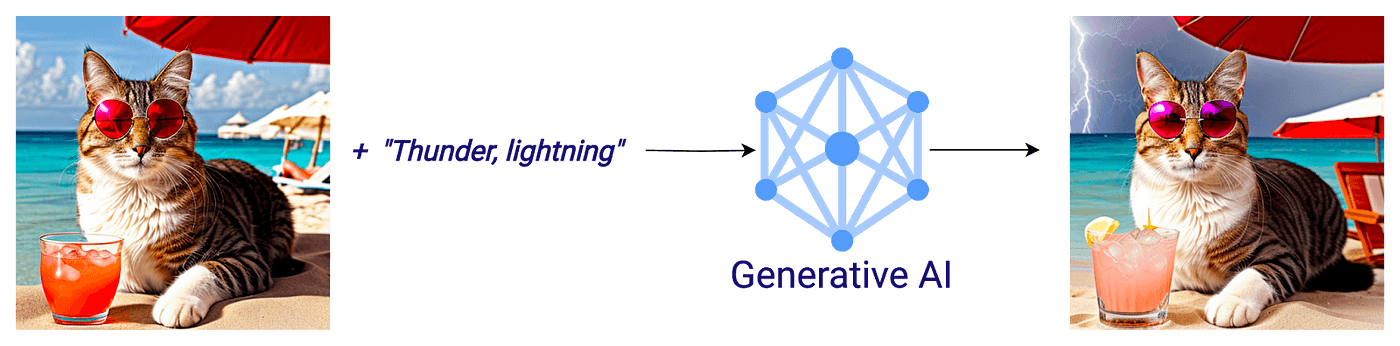

2. Controlling with a reference image

This allows you to present a reference image and watch the AI churn out different variations, tweaking color palettes, styles, and even bringing additional objects into the picture. These variations can additionally be controlled by the text you provide.

For example, in the cat-on-the-beach image below, we can let the AI generate a variation. By providing additional text to the AI, we can control which new elements to add: thunder and lightning. Notice that while this is great for getting variations of a composition, it is really hard to control which parts of the image are kept. Below you can see that the scene and composition are very similar, but besides the thunder and lightning, other elements change as well: the cocktail, the glasses on the cat, and the beach chair in the background.

Image-to-image gives you variations of a reference image. Let the Generative AI go wild for inspiration, or control the process with your own text.

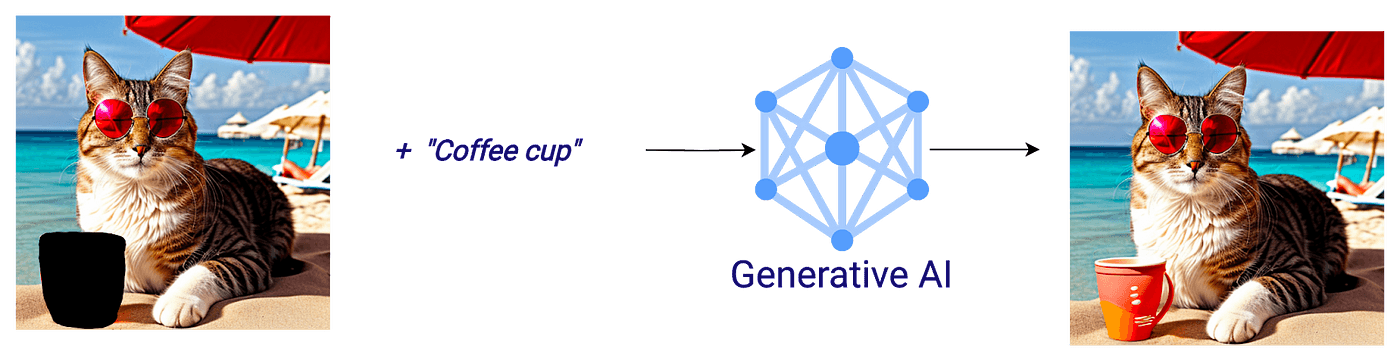

For more targeted editing of specific parts of an image, you can mark them with a paintbrush. This is called inpainting: the generative AI will now try to edit only the marked area, giving you much more control! You can use this to ditch any undesirable components (yes, including electricity cables or unwanted passersby in your shots), or you can even conjure up new or alternative objects in the scene. If the cat should promote a coffee for your campaign instead of a cocktail, we just mark the cup and type what should come instead: a coffee cup.

Inpainting lets the AI generate local edits.
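The idea of editing only the marked area can be sketched as a masked blend. This is a toy illustration in plain Python, not how any particular tool implements inpainting: images are tiny grids of grayscale values, `composite_inpaint` is a hypothetical helper, and the "generated" pixels are made up here rather than produced by a model.

```python
from typing import List

Image = List[List[int]]  # toy grayscale image: rows of pixel values 0-255


def composite_inpaint(original: Image, generated: Image, mask: Image) -> Image:
    """Keep original pixels where the mask is 0, take generated pixels where it is 1."""
    if not (len(original) == len(generated) == len(mask)):
        raise ValueError("original, generated and mask must have the same height")
    result = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        if not (len(orig_row) == len(gen_row) == len(mask_row)):
            raise ValueError("all rows must have the same width")
        result.append([g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)])
    return result


# Hypothetical data: the 2x2 block in the lower middle is the "painted" area.
original = [
    [10, 10, 10, 10],
    [10, 50, 50, 10],
    [10, 50, 50, 10],
]
generated = [
    [99, 99, 99, 99],
    [99, 200, 200, 99],
    [99, 200, 200, 99],
]
mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
]

edited = composite_inpaint(original, generated, mask)
for row in edited:
    print(row)
```

Only the pixels under the mask take on new values; everything outside it stays identical to the original, which is why inpainting gives more control than a full image-to-image variation.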

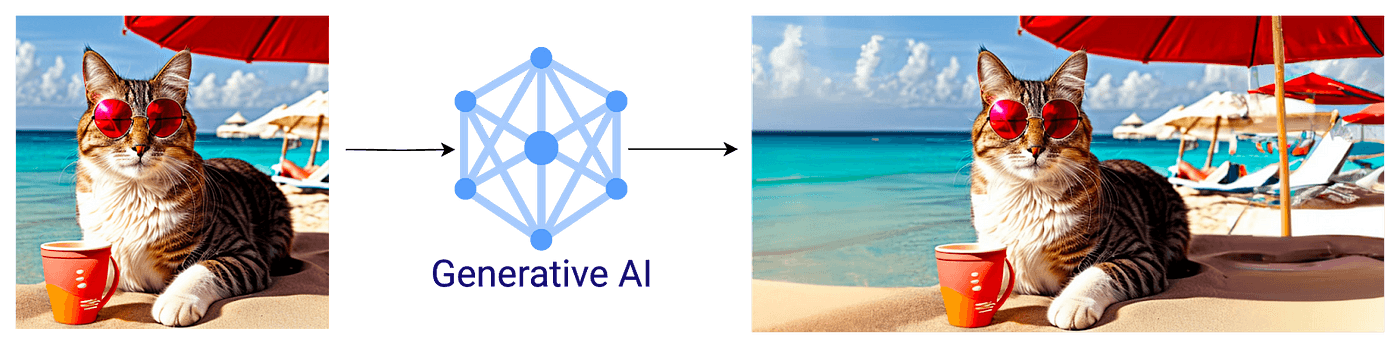

Besides inpainting, we might also want to change the aspect ratio of the cat image for all types of media in our campaign: let’s make a billboard! The AI paints new borders around the image, which is why it’s called outpainting. Let’s take our image widescreen, and let the AI generate plausible borders. This can also be guided with additional text, such as “sunny beach”.

Outpainting generates a billboard version of the image.
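Before outpainting, it helps to know how much new canvas the AI has to fill. The sketch below works out the border sizes needed to reach a target aspect ratio while keeping the original image centered and uncropped. The helper name `outpaint_padding` and the 1024×1024 starting size are assumptions for illustration, not part of any specific tool.

```python
from typing import Tuple


def outpaint_padding(width: int, height: int, ratio_w: int, ratio_h: int) -> Tuple[int, int, int, int]:
    """Return (left, right, top, bottom) padding in pixels to reach ratio_w:ratio_h.

    The original image is never cropped, so only one axis grows.
    """
    if min(width, height, ratio_w, ratio_h) <= 0:
        raise ValueError("all arguments must be positive")
    # Compare width/height with ratio_w/ratio_h using integers only.
    if width * ratio_h < height * ratio_w:
        # Image is too narrow for the target: extend left and right.
        new_width = -(-height * ratio_w // ratio_h)  # ceiling division
        extra = new_width - width
        return extra // 2, extra - extra // 2, 0, 0
    # Image is too wide (or already matches): extend top and bottom.
    new_height = -(-width * ratio_h // ratio_w)  # ceiling division
    extra = new_height - height
    return 0, 0, extra // 2, extra - extra // 2


# A square image taken to a 16:9 widescreen format.
left, right, top, bottom = outpaint_padding(1024, 1024, 16, 9)
print(left, right, top, bottom)  # 398 399 0 0
```

The returned borders are the regions the AI would be asked to generate, optionally guided by a text prompt.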

The next level: Personalized generation

All of the above is available in basically all generative AI services (when ‘buying’). But you can see that it only generates general objects and compositions…

What if I told you that you could have client-specific generation? When ‘building’, not buying, you can have custom generations specific to your styles, and your client’s brand or product. On top of that, we can add control over the generations that goes beyond just text and image variations.

In other words, a customized solution takes you to a whole new level! I’ll show you how to (1) add your own concepts, (2) enhance your generation control, and (3) upgrade to brand-specific generation!

1. Adding your own concepts

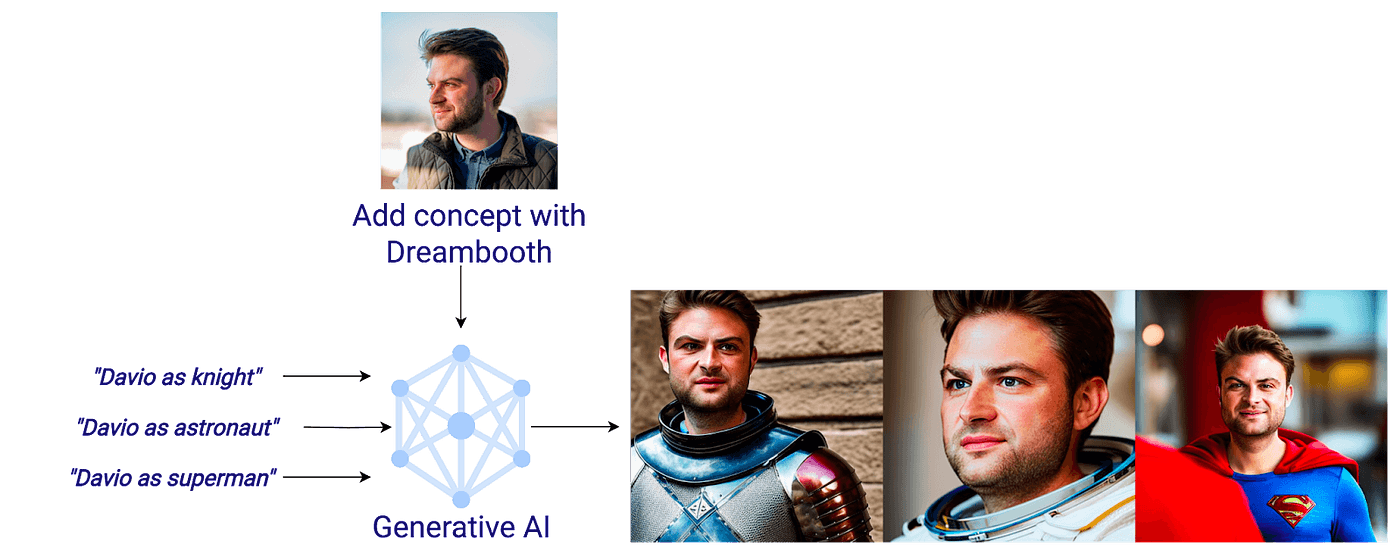

Let’s start with how to add a new concept to the generative AI. This could range from adding your favorite cup to even adding yourself. Innovative methods such as Dreambooth and Textual Inversion allow you to do this by providing just a few example images. In the image below, by collecting just around 10 pictures, we can generate Davio, Superlinear’s CEO, in different compositions guided by text.

Adding the new concept ‘Davio’ to the generative AI with Dreambooth.

2. Adding enhanced control

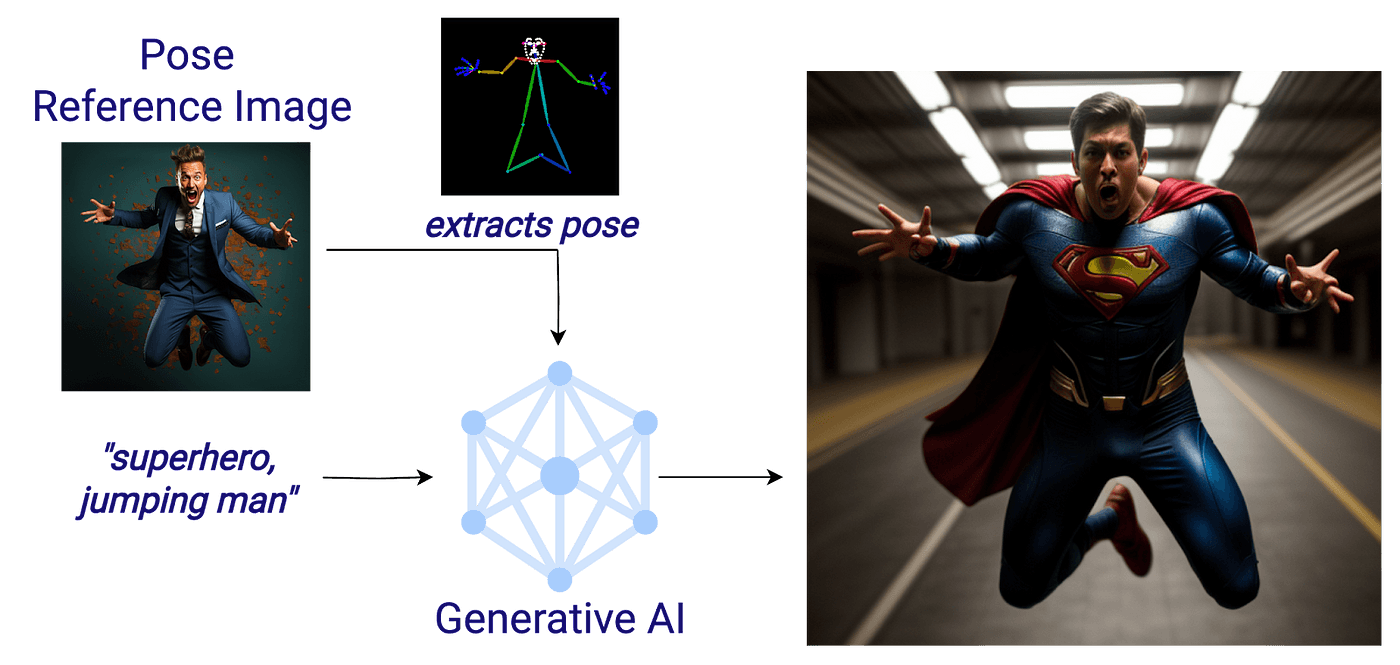

But why stop at adding concepts? Let’s dive deeper and exert more control over your creations with the latest development, ControlNet. We can now use the reference image to copy the specific body pose of a person, or use its contours for your generations!

From the reference image, we can extract the pose of a person, and let the AI only generate people in the exact same pose. Just imagine how you could draft ideas before doing an actual photoshoot. Let’s generate Superman making a crazy jump:

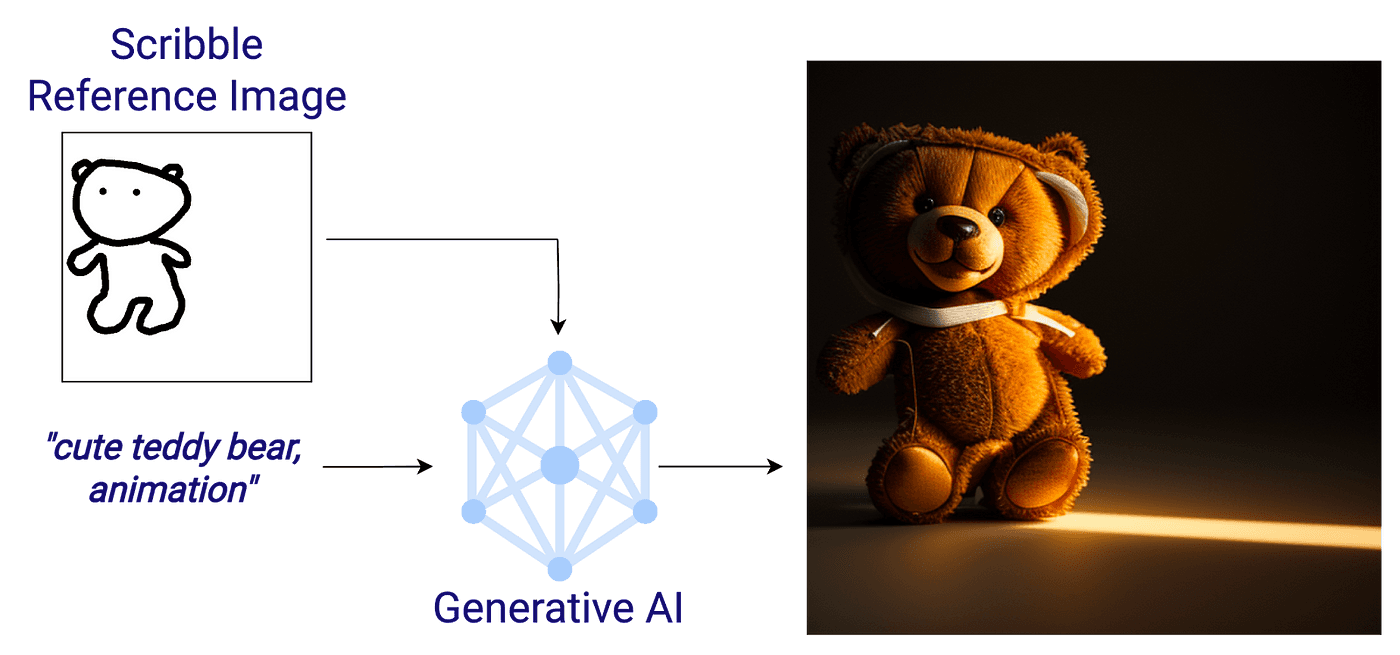

The next one I’m really excited about. Any rough sketches or scribbles you make can now come alive! Below I quickly drafted a bear in Paint, told the AI I wanted it to look like a cute teddy bear in animation style, and there it is. The difference with only using text? Take a look at the composition: it closely follows your drawing! Your basic concept sketches now become reality in no time, superpowering your brainstorms and concept art.

For the talented reader that you are, please don’t judge my drawing.

3. Brand-specific generation

While non-custom models are trained on a wide range of data to please all of their users, you often only want really good results for your own use case. For example, when aiming for realistic photography, you’re not interested in paintings. Luckily, by training the existing Stable Diffusion with your image examples in a specific style, we can make it an expert in generating this style! Just take a look at how this Gelato-style model generates everything as if it’s made of ice cream:

It’s an apple — in your custom ‘ice cream’ style 😮

Don’t want the hassle of training your own generative AI? Maybe someone else has already done this for you! Creators share their models on Civitai, enabling you to freely download generative AIs that are adapted specifically for realistic imagery, food photography, or… basically anything you can imagine (including the ice cream style above 🍦). These models give great examples of how you could also make a generative AI that’s producing your specific brand style. Not surprisingly, this is often a centerpiece of value for Superlinear’s AI clients.

Limitations of the tool

While we mainly focused on what’s possible with generative AI, I’ll now briefly discuss some of its limitations.

Making compositions is really hard for the AI: It can have difficulty understanding the relations between objects, and this becomes more challenging as more objects appear in the scene. The prompt “The apple next to the basket” may result in the apple appearing in the basket instead.

Cherry picking: When you see results from generative AI models, always keep in mind that many variations were generated, and you only get to see the best ones. It may take some tries to get the desired result without artifacts — such as too many fingers or deformed faces. Luckily, you can speed this up by letting the AI generate multiple images at once.
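To get a feel for why generating several images at once helps, here is a small back-of-the-envelope calculation. It assumes each generated image is independently usable with some probability `p_usable`; both that independence and the 20% figure are assumptions for illustration, not measured values.

```python
import math


def chance_of_usable_image(p_usable: float, batch_size: int) -> float:
    """Probability that at least one image in the batch is usable."""
    if not 0.0 < p_usable < 1.0:
        raise ValueError("p_usable must be strictly between 0 and 1")
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return 1.0 - (1.0 - p_usable) ** batch_size


def batch_size_for(p_usable: float, target: float) -> int:
    """Smallest batch size whose chance of a usable image reaches the target."""
    if not 0.0 < p_usable < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("p_usable and target must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p_usable))


# Hypothetical: one in five generations comes out without artifacts.
p = 0.2
for n in (1, 4, 8):
    print(f"batch of {n}: {chance_of_usable_image(p, n):.0%} chance of a usable image")
print("images needed for a 90% chance:", batch_size_for(p, 0.9))
```

Under these assumptions, a handful of extra images per prompt quickly raises the odds of a usable result, which matches the cherry-picking you see in published examples.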

Typography really doesn’t go hand-in-hand with generative AI: Text may occur as an artifact and often doesn’t even form meaningful words. This may result from the AI seeing such text examples in its training images, such as from ads or posters. Putting “text” in the negative prompt can help avoid these artifacts. Again, we need the designer: the way to go is to add text yourself in post-processing!

These limitations really put in perspective that AI is a tool, albeit a powerful one. It is a means, not the end. The creator will always control the creation process, watch over the alignment with the client’s values and ideas, and choose in which parts of the ideation process generative AI can help them thrive over competitors.

Conclusion

AI is a tool. Just as Illustrator and Photoshop were once new technologies and are now indispensable in your workflow, Generative AI is likely to be the next one! Each tool has its strengths and limitations, and we’ve clearly seen both sides in this post. Now, where can it superpower your workflow?

While I gave some pointers in this post, there’s a lot going on in the field of AI and your requirements can be very specific to your use case. At Superlinear, we’re a partner in AI, where our expertise guides our clients to find and build the most value out of AI. If you’re excited about this topic, feel free to reach out for an exploratory call, and together we’ll find the AI tool that superpowers your company.

In the meantime, we’re actively sharing our experience from working with clients in Generative AI. As a teaser for the next blog post: we’ll look beyond the recent hype in image generation we discussed today, at an even wider range of possibilities. Think next-level automated image editing.

Thanks for reading!

author(s)

Matthias De Lange

Solution Architect