
In our mission to achieve impactful AI, we must keep many things in mind, including the rule of law. This blog post highlights the EU AI Act, a law proposed by the European Commission that would significantly impact how businesses in Europe and worldwide use AI. While this regulation may not take effect immediately, companies should keep it in mind as they build or update AI solutions in 2022.

The new regulatory framework of the EU AI Act

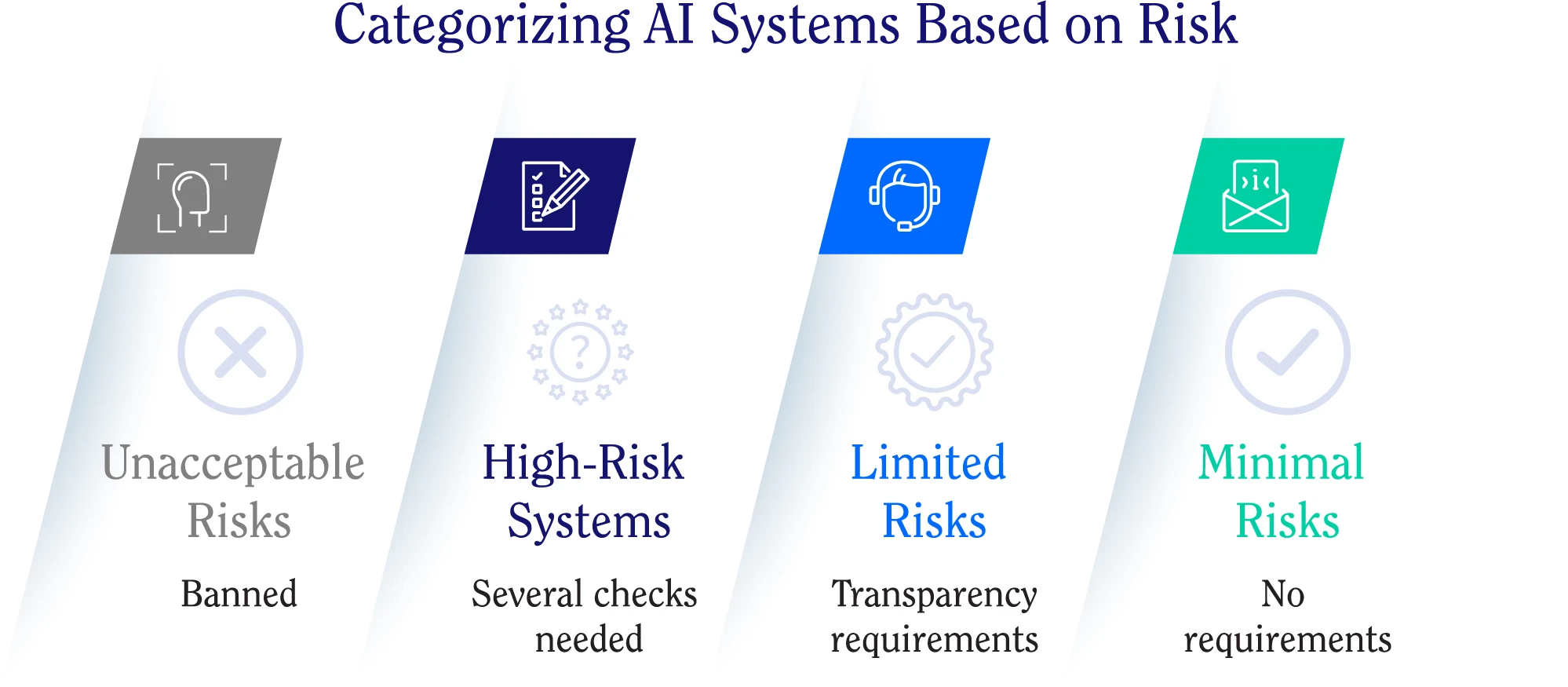

The AI Act creates a regulatory framework for AI, categorizing AI systems based on risk. Each risk category has different requirements companies must follow.

1. Unacceptable risks

The legislation prohibits the use of AI systems that compromise the safety, rights, or livelihoods of citizens. For example, social scoring (like the system currently used in China) and some forms of real-time facial recognition are deemed unacceptable under the AI Act. If your AI system falls into this category, it will be banned in the EU once the AI Act becomes law.

2. High-risk systems

Risk classification is based on the intended purpose of the AI system and the potential harm it could do to the public. Here are some examples of high-risk AI systems:

Consumer credit evaluation – Automated systems for granting loans and lines of credit that could bar people from getting fair credit services.

Employee recruitment – Automated job application and sorting software that could discriminate against applicants.

Transportation – Autonomous vehicles that could compromise the safety of citizens.

Healthcare – Surgery with AI-assisted software or robots that could lead to harmful outcomes.

There is a lot of debate about what constitutes a high-risk AI system, so this category will likely evolve. Under the proposed AI Act, however, companies must complete several steps before placing a high-risk AI system on the EU market:

Complete a conformity assessment to confirm the AI system complies with AI Act requirements.

Register the AI system in a public database of high-risk AI systems (to be set up by the European Commission).

Sign a declaration of conformity and make sure the AI system carries the CE mark.

Monitor the AI system to detect and address any new or additional risks that arise after entering the EU market.

Companies that deploy high-risk AI systems but fail to meet AI Act requirements could face hefty fines.
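To make the steps above easier to track internally, a team could keep a simple checklist per AI system. The snippet below is a minimal sketch of that idea in Python. The class name `HighRiskChecklist` and its field names are our own illustration; they are not terms from the AI Act, and a real compliance process would need legal review.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HighRiskChecklist:
    """Hypothetical tracker for the four steps listed in this article."""

    conformity_assessment_done: bool = False
    registered_in_eu_database: bool = False
    declaration_signed_and_ce_marked: bool = False
    post_market_monitoring_in_place: bool = False

    def missing_steps(self) -> List[str]:
        # Return the names of all steps that are still open, in declaration order.
        return [name for name, done in vars(self).items() if not done]

    def ready_for_eu_market(self) -> bool:
        # Ready only when no step is missing.
        return not self.missing_steps()


checklist = HighRiskChecklist(conformity_assessment_done=True)
print(checklist.missing_steps())
print(checklist.ready_for_eu_market())
```

A checklist like this only records status. It does not decide whether a system is high-risk in the first place, and it does not replace the conformity assessment itself.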

3. Limited risks

This category covers AI systems whose harmful effects are limited, but whose artificial nature users should be made aware of, such as chatbots, virtual assistants, and emotion recognition systems. AI systems in this category must meet specific transparency requirements. For example, an AI chatbot should have a mechanism to alert users that they are interacting with a machine instead of a human.
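As an illustration of the chatbot example, the sketch below wraps an arbitrary reply function so that the first response in a conversation always carries a disclosure notice. Everything here (`DisclosingChatbot`, `DISCLOSURE`, the wording of the notice) is a hypothetical example of ours. The AI Act does not prescribe a specific mechanism or text.

```python
from typing import Callable

# Example wording only; the actual notice should be agreed with legal counsel.
DISCLOSURE = "You are chatting with an automated assistant, not a human."


class DisclosingChatbot:
    """Wraps a reply function and discloses the bot's nature on first contact."""

    def __init__(self, reply_fn: Callable[[str], str]) -> None:
        self._reply_fn = reply_fn
        self._disclosed = False

    def respond(self, message: str) -> str:
        reply = self._reply_fn(message)
        if not self._disclosed:
            # Prepend the notice once, at the start of the conversation.
            self._disclosed = True
            return f"{DISCLOSURE}\n{reply}"
        return reply


bot = DisclosingChatbot(lambda message: f"You said: {message}")
print(bot.respond("Hello"))
print(bot.respond("Is anyone there?"))
```

Keeping the disclosure in a wrapper rather than inside the model means it still appears if the underlying chatbot is swapped out.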

4. Minimal risks

This category includes AI systems that pose little or no risk to the public, such as email spam filters, customer segmentation systems, and inventory management systems. AI systems in this category have no requirements under the AI Act.
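If you maintain an inventory of AI systems, the four categories can be recorded as a simple lookup. The following is a rough sketch, assuming you tag each use case by hand. The names `RiskTier`, `TIER_SUMMARY`, and `EXAMPLE_USE_CASES` are ours, and the mapping only repeats examples from this article. It is not an official classification.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# One-line summary of what this article says each tier entails.
TIER_SUMMARY = {
    RiskTier.UNACCEPTABLE: "prohibited in the EU",
    RiskTier.HIGH: "allowed only after meeting the high-risk requirements",
    RiskTier.LIMITED: "allowed, with transparency requirements",
    RiskTier.MINIMAL: "allowed, no requirements under the AI Act",
}

# Example use cases named in this article, tagged by hand (illustrative only).
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "consumer credit evaluation": RiskTier.HIGH,
    "employee recruitment": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "email spam filter": RiskTier.MINIMAL,
}


def summarize(use_case: str) -> str:
    # Look up the hand-assigned tier and describe its consequence.
    tier = EXAMPLE_USE_CASES[use_case]
    return f"{use_case}: {tier.value} risk, {TIER_SUMMARY[tier]}"


for name in EXAMPLE_USE_CASES:
    print(summarize(name))
```

The hard part in practice is the hand-tagging, since the boundaries of the high-risk category are still being debated.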

What could future EU AI legislation regulate?

Besides the new AI Act, the EU is working on a whole slew of related regulations, such as the Data Governance Act and the ePrivacy Regulation (which would replace the current ePrivacy Directive).

The vision paper released in 2020 also suggests that other AI-specific legislation may be on its way. We're excited to see how the regulatory landscape evolves over time, and we aim to stay ahead of the curve when it comes to new regulatory frameworks and compliance.

These future developments could include legal domains where legislation has never applied to AI directly, like liability.

Companies should prepare for future EU AI legislation now

In our opinion, companies must understand current legislation impacting AI and prepare for future AI regulatory updates now. They should also proactively build ethical AI solutions, since AI legislation will eventually mandate that.

We will help you build innovative and ethical AI solutions that comply with current and future laws. Driving fairness in AI, for example, requires making difficult choices, and we can guide you to make the right ones. We're developing a new version of AutoML that provides fast and accurate predictions, as well as explanations for each prediction. We can also work with you to set up a framework for effective human oversight, so that you can ensure accountability when using AI.

author(s)

Joren Verspeurt