Article

Many languages, like Dutch, can form new words by endlessly adding compounds onto the word. In real-world settings, we at Superlinear have observed some very creative use of Dutch compounding in our datasets, making for a huge number of unique words. At the same time, however, modern NLP methods typically represent language one word at a time by using word embeddings. This means that the words “klantje” and “klant” (variants on “customer”) would be treated as completely separate from each other; yet, if the AI never had seen the word “klanten”, it would simply say “out-of-vocabulary word detected, I have no idea what to do!”

At Superlinear.ai, our AI knows what to do, and does not need any of the overhead typically used in the massive GPU/TPU clusters that train huge deep language models. Indeed, we don’t believe that you need to burn through the electricity requirements of a small village* just to have your AI know what “klanten” means.

*E.g., training BERT-large requires 16 TPUs on Google’s cloud servers to be training the model for 4 days straight.

A typical NLP problem in Belgium

As a Belgian machine learning company, we typically deal with real-world datasets that can contain up to four languages at once (Dutch, French, German, and English). Yet, at the same time, it is desirable for your NLP system to be language agnostic; consider the example of recommending a candidate for a job:

Candidate resumé

Description: Experienced Python programmer and highly qualified AI practitioner who can implement new, state-of-the-art artificial intelligence algorithms for natural language processing and a large variety of machine learning problems.

Job opening

Omschrijving: We zijn op zoek naar top notch software ingenieurs met expertise in artificiële intelligentie en NLP. Engelstaligen en Nederlandstaligen zijn van harte welkom!

If you don’t have a word embeddings that represent “artificial intelligence” as semantically equivalent to “artificiële intelligentie”, your recommender system will miss out on showing the job opening to this excellent candidate! Indeed, it is possible that your model would predict that “artificiële” is out-of-vocabulary (OOV), which means it would ignore this word.

Out-of-vocabulary? No problem!

The word “engelstaligen” (“English-speakers”) is out-of-vocabulary on ConceptNet; i.e., we don’t have a word embedding for it, meaning that a standard AI system cannot create a representation for the word. Indeed, in NLP, we are often confronted with new words that our algorithm never observed during training — we call this the “out-of-vocabulary” (OOV) problem.

In Belgium, with 4 de facto languages, we aren’t surprised when we find that 90% of our real-world data is OOV! Yet, to understand a job description and give a recommendation, it helps when you know the meanings of more than 10% of the words. So, to generate value for businesses, it is quite important that we know how to deal with multilingual OOV words with precision and speed. Indeed, our solution will know the meaning of 100% of the words, as we describe later.

While Facebook’s FastText algorithm exists to solve the OOV problem, Facebook has not (to our knowledge) released multilingual-aligned embeddings that would help solve our typical Belgian NLP problem above. Fortunately, the creators of ConceptNet have released a large dataset of retro-fitted and multilingual-aligned word embeddings that greatly assist with this problem. Using those embeddings, you will find that “artificiële intelligentie” indeed has a very similar vector representation to “artificial intelligence”, which is exactly what we would need for our AI-powered job recommender to give the recommendation!

Below, we will go over three solutions to the OOV problem: a simple string matcher, a deep contextualised word embedder, and compact subword embedding decomposition. We have found the first to be overly simple, the second to be overly complicated, and the third, our novel implementation and solution to the OOV problem, to be the best for our use-cases. Feel free to skip ahead to our results (Conclusion).

Solution 1: String Matching (too simple!)

The most simple way to “solve” the OOV problem is to have your program say: “If I see a word I’ve never seen before, look at my known words and see which one has the most similar spelling to it, then replace the OOV word with this most similar word.”

This can be a very useful solution when you are confronted with misspelled words; e.g., this system would correctly map “programmign” to “programming”, which is a useful first step. However, this can result in very incorrect mappings too: consider the Dutch word “lasser” (“welder”) — if “lasser” were not seen during training, it might predict the English word “laser” as the most similar word instead — definitely not the same thing!

Lastly, a big problem with this method is that doing string comparisons to hundreds of thousands of known words tends to be quite slow; we’ve found that this is a bottleneck of roughly 100,000 characters/second, which is not fast enough for real-world AI solutions.

Solution 2: Deep contextualized embedding (too complicated!)

Deep contextualized embeddings tend to be the “hot topic” in NLP research and popular NLP culture right now. This is because big neural networks like BERT, ELMO, GPT-2, etc., have all been able to obtain state-of-the-art results on many NLP tasks. Seems great, right?

The problem with these deep language models is that they aren’t very practical in real-world business settings, at least currently:

First, they require huge amounts of resources to train these models (16 TPUs, 64GB each, trained for 4 days with BERT).

Second, even if you use a pre-trained model, you essentially are locked-in to renting Google cloud TPUs to perform inference at running time due to the fact that these models require a 64GB TPU/GPU to even fit into memory.

Third, and perhaps most importantly, these models are quite slow; even the most well-optimized ones can’t break 20,000 characters per second for one GPU with medium-length sentences.

This is not to mention the latency problem of sending and receiving large queries to and from the Google Cloud service and its 64GB deep neural network language model… All just to determine the meaning of the word “klanten” or “engelstaligen”!

Solution 3: Compact subword embedding decomposition (just right!)

At Superlinear.ai our engineers stay up-to-date on recent advances in NLP technology. One paper from NAACL 2019 has completely flown under the pop-NLP radar: an excellent work produced at the world-famous Japanese Riken Institute called Subword-based Compact Reconstruction of Word Embeddings.

The small, budding field of work in NLP on “subword-based reconstruction” of pre-trained embeddings is not well-known, but is elegantly simple. The basic idea is that, given a word embedding for, say, “klantje”, we should be able to decompose it into subword embeddings that sum together to reproduce the original embedding. That is to say:

A word should be equal to the sum of its parts.

Indeed, we can train new embeddings with this objective to learn a new embedding for each subword on the left side:

k + l + a + n + … + nt + tj + je + … + kla + … + klan + lant +… ~= klantje

Technically, this is a simple weighted least-squares optimisation problem that can be solved in less than an hour on a single CPU (compare to 4 days on 16 TPUs!). After that, you will have all of your subword embeddings and will be able to make a unique high-quality representation for any new word.

Conclusion: results from our solution

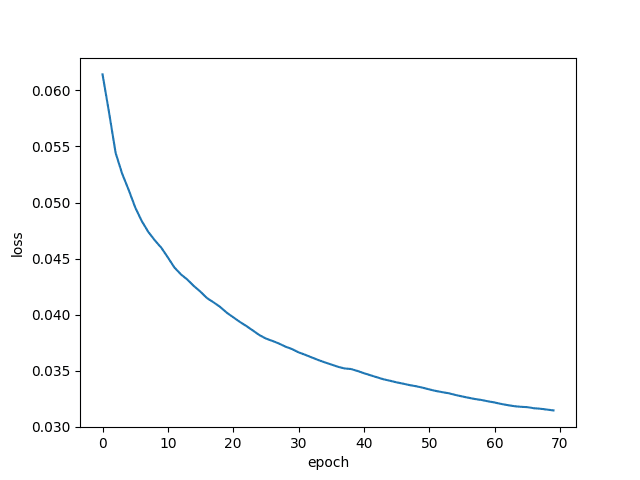

The paper mentioned above inspired us to incorporate new ideas into the subword-decomposition technique for our use-case, using some very technically specific hashing tricks and a special weighting on the loss function. We train the subword embeddings very quickly, in less than an hour on a single CPU, and we observe a very stable learning curve.

Recall that we are doing subword decomposition on the ConceptNet embeddings mentioned above, which is very useful because it means the subword embeddings will contain the multilingual information we require! We observe in the header image of this blog post, and in the GIF below, that the model makes high quality embeddings for out-of-vocabulary words across multiple languages (English, French, Dutch).

Example subword-based embeddings for OOV words around the Dutch word “muzietheorie” (“music theory”).

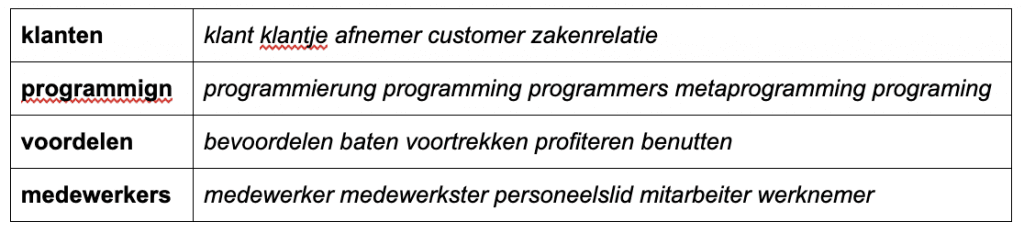

Here are some examples of word embedding similarities; on the left is an example word that is OOV for ConceptNet, and on the right are the most similar words to the subword-reconstructed word embedding for the OOV word:

And, last but not least, for “hottentottencircustentententoonstelling” we get the following most similar words: tentoonstelling, expositie, exposition, expositions, vertoon. Not bad!

After the embeddings are trained, we also get very high inference speed! We’ve calculated that our method, starting from raw text, produces word embeddings at a rate of 1 million characters per second using a single CPU.

If researchers are interested in using our solution, we will consider open-sourcing this project in the future.

author(s)

Kian Kenyon-Dean